Training of Document Categorizer using Maximum Entropy Model in OpenNLP

In this tutorial, we shall learn how to train a Document Categorizer using the Maximum Entropy model in OpenNLP.

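Document categorization is a requirement-based task: the categories depend entirely on the application. Hence Apache OpenNLP does not provide a pre-built model for this natural language processing problem, and you have to train one on your own data.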

In this tutorial, we shall train the Document Categorizer to classify documents into two categories: Thriller and Romantic. The categories are movie genres, and the data for each document is the plot of a movie.
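Roughly speaking, a maximum entropy classifier gives every (feature, category) pair a learned weight, scores a document for each category by summing the weights of its features, and turns the scores into probabilities by exponentiating and normalising them. The plain-Java sketch below illustrates only that scoring step, assuming plain words as features; the class MaxentScoringSketch and all weights are hypothetical, and this is not OpenNLP's model code (OpenNLP's doccat uses bag-of-words features by default and learns the weights during training).

```java
import java.util.Map;

/**
 * Illustrative sketch (not OpenNLP code) of how a maximum entropy model turns
 * feature weights into category probabilities:
 *   p(c | doc) = exp(sum of the weights of doc's features for c) / Z
 * where Z normalises the scores over all categories.
 * All weights used in main() are hypothetical.
 */
public class MaxentScoringSketch {

    static double[] probabilities(String[] categories, String[] words,
                                  Map<String, Map<String, Double>> weights) {
        double[] scores = new double[categories.length];
        double max = Double.NEGATIVE_INFINITY;
        for (int c = 0; c < categories.length; c++) {
            Map<String, Double> w = weights.getOrDefault(categories[c], Map.of());
            for (String word : words) {
                // a word with no weight for this category contributes 0
                scores[c] += w.getOrDefault(word, 0.0);
            }
            max = Math.max(max, scores[c]);
        }
        // exponentiate (shifted by the max for numerical stability) and normalise
        double z = 0.0;
        for (int c = 0; c < scores.length; c++) {
            scores[c] = Math.exp(scores[c] - max);
            z += scores[c];
        }
        for (int c = 0; c < scores.length; c++) {
            scores[c] /= z;
        }
        return scores;
    }

    public static void main(String[] args) {
        String[] categories = {"Thriller", "Romantic"};
        // hypothetical weights, chosen only for illustration
        Map<String, Map<String, Double>> weights = Map.of(
                "Thriller", Map.of("captive", 1.2, "held", 0.3),
                "Romantic", Map.of("banquet", 0.8, "destiny", 0.9));
        String[] doc = {"held", "captive", "in", "Mexico"};
        double[] p = probabilities(categories, doc, weights);
        for (int i = 0; i < categories.length; i++) {
            System.out.println(categories[i] + " : " + p[i]);
        }
    }
}
```

Words that carry no weight for any category (here "in" and "Mexico") leave the scores unchanged, so a document made only of such words gets equal probabilities for all categories.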

Steps for Training of Document Categorizer

Following are the steps to train a Document Categorizer that uses the Maxent (Maximum Entropy) mechanism to create a model:

Step 1 : Prepare the training data.

The training data file should contain one example per observation (document), one per line, in the format: the category, followed by the data of the document, separated by a space.

For example, consider the following line from the training file.

en-movie-category.train

Thriller John Hannibal Smith Liam Neeson is held captive in Mexico

In this line:

- Category is “Thriller”
- Data of the document is “John Hannibal Smith Liam Neeson is held captive in Mexico”.

The complete training file used in this example is en-movie-category.train.
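In other words, each line is split at whitespace: the first token is the category and the remaining tokens are the words of the document. The plain-Java sketch below mimics that parsing so you can sanity-check a training file before handing it to OpenNLP. It is not OpenNLP's DocumentSampleStream; the class TrainingLineSketch and its ParsedLine holder are hypothetical. It rejects a line that has no document text after the category, which, to our knowledge, DocumentSampleStream also refuses (with an IOException).

```java
import java.util.Arrays;

/**
 * Illustrative sketch (not OpenNLP's DocumentSampleStream) of how one line of
 * the training file is split into a category and the words of the document.
 */
public class TrainingLineSketch {

    /** Hypothetical holder for one parsed training line. */
    static final class ParsedLine {
        final String category;
        final String[] words;

        ParsedLine(String category, String[] words) {
            this.category = category;
            this.words = words;
        }
    }

    static ParsedLine parse(String line) {
        // split on runs of whitespace; the first token is the category
        String[] tokens = line.trim().split("\\s+");
        if (tokens.length < 2) {
            throw new IllegalArgumentException(
                    "A training line needs a category followed by document text: \"" + line + "\"");
        }
        return new ParsedLine(tokens[0], Arrays.copyOfRange(tokens, 1, tokens.length));
    }

    public static void main(String[] args) {
        ParsedLine p = parse("Thriller John Hannibal Smith Liam Neeson is held captive in Mexico");
        System.out.println("Category : " + p.category);
        System.out.println("Words    : " + String.join(" ", p.words));
    }
}
```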

Step 2 : Read the training data file.

```java
InputStreamFactory dataIn = new MarkableFileInputStreamFactory(new File("train" + File.separator + "en-movie-category.train"));
ObjectStream<String> lineStream = new PlainTextByLineStream(dataIn, "UTF-8");
ObjectStream<DocumentSample> sampleStream = new DocumentSampleStream(lineStream);
```

Step 3 : Define the training parameters.
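In this step, ITERATIONS_PARAM sets the number of training iterations (the console output further down reports "Performing 10 iterations."), and CUTOFF_PARAM sets the minimum number of times a feature must occur in the training data to be kept. With a cutoff of 0, every word in the small training set is kept, which matches "Indexing events using cutoff of 0" in the output. The sketch below shows the cutoff idea in plain Java on a few made-up word lists; CutoffSketch is a hypothetical name, and this is not OpenNLP's indexing code.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/**
 * Illustrative sketch (not OpenNLP's indexer) of a feature frequency cutoff:
 * a feature is kept only if it occurs at least `cutoff` times in the training data.
 */
public class CutoffSketch {

    static Set<String> keptFeatures(String[][] documents, int cutoff) {
        // count how often each word occurs across all documents
        Map<String, Integer> counts = new HashMap<>();
        for (String[] doc : documents) {
            for (String word : doc) {
                counts.merge(word, 1, Integer::sum);
            }
        }
        // keep only words that reach the cutoff (sorted for stable output)
        Set<String> kept = new TreeSet<>();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            if (e.getValue() >= cutoff) {
                kept.add(e.getKey());
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // made-up word lists standing in for training documents
        String[][] docs = {
                {"held", "captive", "in", "Mexico"},
                {"Kate", "in", "the", "photos"},
                {"in", "love"}
        };
        System.out.println("cutoff 0 : " + keptFeatures(docs, 0));
        System.out.println("cutoff 2 : " + keptFeatures(docs, 2));
    }
}
```

With a cutoff of 2 only "in" survives, since it is the only word seen more than once. On a small training set like the one in this tutorial, a higher cutoff would throw away most of the vocabulary, which is why the example uses 0.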

```java
TrainingParameters params = new TrainingParameters();
params.put(TrainingParameters.ITERATIONS_PARAM, "10");
params.put(TrainingParameters.CUTOFF_PARAM, "0");
```

Step 4 : Train a model from the training data read in Step 2, using the training parameters defined in Step 3.

```java
DoccatModel model = DocumentCategorizerME.train("en", sampleStream, params, new DoccatFactory());
```

Step 5 : Save the newly trained model to a local file, so that it can be used later for predicting movie genres.

```java
// try-with-resources closes (and flushes) the output stream; the "model" folder must already exist
try (BufferedOutputStream modelOut = new BufferedOutputStream(new FileOutputStream("model" + File.separator + "en-movie-classifier-maxent.bin"))) {
    model.serialize(modelOut);
}
```

Step 6 : Test the model on a sample string and print the probability of the string belonging to each category. The method DocumentCategorizer.categorize(String[] wordsOfDoc) takes as its argument an array of Strings, which are the words of the document.

```java
DocumentCategorizer doccat = new DocumentCategorizerME(model);
// keep letters only and split the text into words
String[] docWords = "Afterwards Stuart and Charlie notice Kate in the photos Stuart took at Leopolds ball and realize that her destiny must be to go back and be with Leopold That night while Kate is accepting her promotion at a company banquet he and Charlie race to meet her and show her the pictures Kate initially rejects their overtures and goes on to give her acceptance speech but it is there that she sees Stuarts picture and realizes that she truly wants to be with Leopold"
        .replaceAll("[^A-Za-z]+", " ").trim().split(" ");
double[] aProbs = doccat.categorize(docWords);
```

The complete program is shown below.

DocClassificationMaxentTrainer.java

```java
import java.io.BufferedOutputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;

import opennlp.tools.doccat.DoccatFactory;
import opennlp.tools.doccat.DoccatModel;
import opennlp.tools.doccat.DocumentCategorizer;
import opennlp.tools.doccat.DocumentCategorizerME;
import opennlp.tools.doccat.DocumentSample;
import opennlp.tools.doccat.DocumentSampleStream;
import opennlp.tools.util.InputStreamFactory;
import opennlp.tools.util.MarkableFileInputStreamFactory;
import opennlp.tools.util.ObjectStream;
import opennlp.tools.util.PlainTextByLineStream;
import opennlp.tools.util.TrainingParameters;

/**
 * OpenNLP version 1.7.2
 * Training of Document Categorizer using Maximum Entropy Model in OpenNLP
 * @author www.tutorialkart.com
 */
public class DocClassificationMaxentTrainer {

    public static void main(String[] args) {

        String trainFile = "train" + File.separator + "en-movie-category.train";
        String modelFile = "model" + File.separator + "en-movie-classifier-maxent.bin";

        try {
            DoccatModel model;

            // read the training data; the streams are closed automatically
            InputStreamFactory dataIn = new MarkableFileInputStreamFactory(new File(trainFile));
            try (ObjectStream<String> lineStream = new PlainTextByLineStream(dataIn, "UTF-8");
                 ObjectStream<DocumentSample> sampleStream = new DocumentSampleStream(lineStream)) {

                // define the training parameters
                TrainingParameters params = new TrainingParameters();
                params.put(TrainingParameters.ITERATIONS_PARAM, "10");
                params.put(TrainingParameters.CUTOFF_PARAM, "0");

                // create a model from the training data
                model = DocumentCategorizerME.train("en", sampleStream, params, new DoccatFactory());
            }
            System.out.println("\nModel is successfully generated.");

            // save the model to a local file; the "model" folder must already exist,
            // because FileOutputStream does not create missing folders
            try (BufferedOutputStream modelOut = new BufferedOutputStream(new FileOutputStream(modelFile))) {
                model.serialize(modelOut);
            }
            System.out.println("\nModel is saved locally at : " + modelFile);

            // test the model by subjecting it to a prediction
            DocumentCategorizer doccat = new DocumentCategorizerME(model);
            // keep letters only and split the text into words
            String[] docWords = "Afterwards Stuart and Charlie notice Kate in the photos Stuart took at Leopolds ball and realise that her destiny must be to go back and be with Leopold That night while Kate is accepting her promotion at a company banquet he and Charlie race to meet her and show her the pictures Kate initially rejects their overtures and goes on to give her acceptance speech but it is there that she sees Stuarts picture and realises that she truly wants to be with Leopold"
                    .replaceAll("[^A-Za-z]+", " ").trim().split(" ");
            double[] aProbs = doccat.categorize(docWords);

            // print the probabilities of the categories
            System.out.println("\n---------------------------------\nCategory : Probability\n---------------------------------");
            for (int i = 0; i < doccat.getNumberOfCategories(); i++) {
                System.out.println(doccat.getCategory(i) + " : " + aProbs[i]);
            }
            System.out.println("---------------------------------");

            System.out.println("\n" + doccat.getBestCategory(aProbs) + " : is the predicted category for the given sentence.");
        }
        catch (IOException e) {
            System.out.println("An exception occurred while reading the training file or saving the model. Please check.");
            e.printStackTrace();
        }
    }
}
```

When the above program is run, the output to the console is as shown below:

```text
Indexing events using cutoff of 0
Computing event counts...  done. 66 events
Indexing...  done.
Sorting and merging events... done. Reduced 66 events to 66.
Done indexing.
Incorporating indexed data for training...
done.
Number of Event Tokens: 66
Number of Outcomes: 2
Number of Predicates: 6886
...done.
Computing model parameters ...
Performing 10 iterations.
1: ... loglikelihood=-45.747713916956386 0.4090909090909091
2: ... loglikelihood=-41.65758235918323 1.0
3: ... loglikelihood=-38.24560021570176 1.0
4: ... loglikelihood=-35.34031906559529 1.0
5: ... loglikelihood=-32.832760472542496 1.0
6: ... loglikelihood=-30.646350698439953 1.0
7: ... loglikelihood=-28.72390702819924 1.0
8: ... loglikelihood=-27.02122456238792 1.0
9: ... loglikelihood=-25.50340047819185 1.0
10: ... loglikelihood=-24.142465730604112 1.0

Model is successfully generated.

Model is saved locally at : model/en-movie-classifier-maxent.bin

---------------------------------
Category : Probability
---------------------------------
Thriller : 0.47150150747178926
Romantic : 0.5284984925282107
---------------------------------

Romantic : is the predicted category for the given sentence.
```
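Two things are worth checking in the output above: the probabilities over all categories add up to 1, and the predicted category is simply the one with the highest probability. The plain-Java sketch below reproduces that last step using the two probabilities printed above; bestCategory is a hypothetical helper meant to illustrate what doccat.getBestCategory(aProbs) returns, not OpenNLP's implementation (in this sketch, ties go to the first category).

```java
/**
 * Illustrative sketch of choosing the best category: the category with the
 * highest probability (ties go to the first one). Not OpenNLP's code.
 */
public class BestCategorySketch {

    static String bestCategory(String[] categories, double[] probs) {
        int best = 0;
        for (int i = 1; i < probs.length; i++) {
            if (probs[i] > probs[best]) {
                best = i;
            }
        }
        return categories[best];
    }

    public static void main(String[] args) {
        // probabilities copied from the console output of the trainer above
        String[] categories = {"Thriller", "Romantic"};
        double[] probs = {0.47150150747178926, 0.5284984925282107};

        double sum = 0.0;
        for (double p : probs) {
            sum += p;
        }
        System.out.println("Sum of probabilities : " + sum);
        System.out.println(bestCategory(categories, probs) + " : is the predicted category");
    }
}
```

With only about 53% for Romantic, the margin over Thriller is small, which is not surprising for a model trained on 66 short plot summaries.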

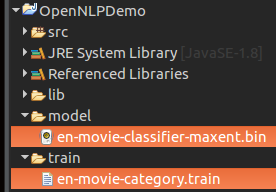

The location of the training file and the locally saved model file are shown in the following picture.

Conclusion

In this Apache OpenNLP tutorial, we briefly learnt the training input requirements of the Document Categorizer API of OpenNLP, and walked through an example program that trains a Document Categorizer using the Maximum Entropy model.