Apache Spark MLlib Tutorial – Learn about Spark’s Scalable Machine Learning Library

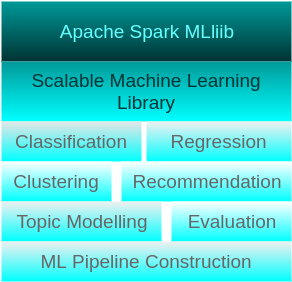

MLlib is one of Apache Spark’s four libraries, alongside Spark SQL, Spark Streaming and GraphX. It is Spark’s scalable machine learning library.

Programming

MLlib can be used from Java, Scala, Python and R through Spark’s APIs.

In recent Spark releases, MLlib interoperates with NumPy in Python and with R libraries.

Data Source

Using MLlib, one can read data from HDFS (Hadoop Distributed File System) and HBase, in addition to local files. This makes it easy to plug MLlib into existing Hadoop workflows.

Performance

Spark’s framework excels at iterative computation, which lets the iterative parts of MLlib algorithms run fast. MLlib also contains high-quality algorithms for classification, regression, recommendation, clustering, topic modelling, etc.

The following are examples of MLlib algorithms, each with a step-by-step walkthrough of ML Pipeline construction and model building:

- Classification using Logistic Regression

- Classification using Naive Bayes

- Generalized Linear Regression

- Survival Regression

- Decision Trees

- Random Forests

- Gradient Boosted Trees

- Recommendation using Alternating Least Squares (ALS)

- Clustering using KMeans

- Clustering using Gaussian Mixtures

- Topic Modelling using Latent Dirichlet Allocation (LDA)

- Frequent Itemsets

- Association Rules

- Sequential Pattern Mining

MLlib Utilities

MLlib provides the following workflow utilities:

- Feature Transformation

- ML Pipeline construction

- Model Evaluation

- Hyper-parameter tuning

- Saving and loading of models and pipelines

- Distributed Linear Algebra

- Statistics

Conclusion

In this Apache Spark Tutorial – Spark MLlib Tutorial, we have learnt about the machine learning algorithms available in Spark MLlib and the workflow utilities it provides.