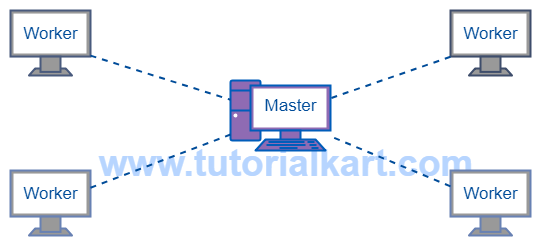

Apache Spark can be configured to run as a master node or a slave node. In this tutorial, we shall learn to set up an Apache Spark cluster with a master node and multiple slave (worker) nodes. You can set up a computer running Windows, Linux, or macOS as a master or a slave.

Setup an Apache Spark Cluster

To set up an Apache Spark cluster, we need to do two things:

- Set up the master node.

- Set up the worker nodes.

Setup Spark Master Node

The following is a step-by-step guide to setting up the master node of an Apache Spark cluster. Execute these steps on the node that you want to be the master.

1. Navigate to Spark Configuration Directory.

Go to the SPARK_HOME/conf/ directory.

SPARK_HOME is the complete path to the root directory of Apache Spark on your computer.
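As a quick sanity check before editing anything, the configuration directory can be resolved from SPARK_HOME with a small shell function. This is only a sketch: the function name `spark_conf_dir` is hypothetical and not part of Spark, and it assumes SPARK_HOME is exported in your shell unless a path is passed explicitly.

```shell
# Hypothetical helper (not part of Spark): print the conf directory for a
# given Spark root, or fail if the root is unknown or has no conf directory.
#   $1 = Spark root directory (optional, defaults to $SPARK_HOME)
spark_conf_dir() {
    spark_home="${1:-${SPARK_HOME:-}}"
    if [ -z "$spark_home" ]; then
        echo "SPARK_HOME is not set" >&2
        return 1
    fi
    if [ ! -d "$spark_home/conf" ]; then
        echo "no conf directory under $spark_home" >&2
        return 1
    fi
    printf '%s\n' "$spark_home/conf"
}

# Usage (assumes SPARK_HOME is exported):
#   cd "$(spark_conf_dir)"
```

Failing early here avoids creating spark-env.sh in the wrong place when SPARK_HOME points at a stale or mistyped path.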

2. Edit the file spark-env.sh – Set SPARK_MASTER_HOST.

Note: If spark-env.sh is not present, spark-env.sh.template will be. Make a copy of spark-env.sh.template named spark-env.sh and add or edit the field SPARK_MASTER_HOST. The relevant part of the file, with SPARK_MASTER_HOST added, is shown below:

spark-env.sh

# Options for the daemons used in the standalone deploy mode

# - SPARK_MASTER_HOST, to bind the master to a different IP address or hostname

SPARK_MASTER_HOST='192.168.0.102'

# - SPARK_MASTER_PORT / SPARK_MASTER_WEBUI_PORT, to use non-default ports for the master

Replace the IP with the IP address assigned to your computer (the one you want to make the master).
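The copy-and-edit step above can be sketched as a small shell function. The name `set_master_host` is hypothetical and not part of Spark. It assumes the conf directory contains spark-env.sh or spark-env.sh.template, and that SPARK_MASTER_HOST is assigned on a line of its own.

```shell
# Hypothetical helper (not part of Spark): make sure conf/spark-env.sh exists
# and contains exactly one uncommented SPARK_MASTER_HOST assignment.
#   $1 = Spark conf directory, $2 = IP address or hostname of the master
set_master_host() {
    conf_dir="$1"
    master_host="$2"
    env_file="$conf_dir/spark-env.sh"

    # Create spark-env.sh from the shipped template if it is missing.
    if [ ! -f "$env_file" ]; then
        cp "$conf_dir/spark-env.sh.template" "$env_file" || return 1
    fi

    # Drop any earlier uncommented assignment, then append the new one.
    sed '/^SPARK_MASTER_HOST=/d' "$env_file" > "$env_file.tmp" || return 1
    printf "SPARK_MASTER_HOST='%s'\n" "$master_host" >> "$env_file.tmp"

    # Write back in place so the file keeps its original permissions.
    cat "$env_file.tmp" > "$env_file" && rm -f "$env_file.tmp"
}

# Usage (the IP address is the example value from this tutorial):
#   set_master_host "$SPARK_HOME/conf" 192.168.0.102
```

Because the old assignment is removed before the new one is appended, running the function again with a different address leaves a single assignment rather than a growing list.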

3. Start Spark as master.

Go to SPARK_HOME/sbin and execute the following command.

$ ./start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /usr/lib/spark/logs/spark-arjun-org.apache.spark.deploy.master.Master-1-arjun-VPCEH26EN.out

4. Verify the log file.

You should see the following in the log file, which shows the IP address of the master node, the port on which Spark has been started, the port on which the web UI has been started, and so on.

Spark Command: /usr/lib/jvm/default-java/jre/bin/java -cp /usr/lib/spark/conf/:/usr/lib/spark/jars/* -Xmx1g org.apache.spark.deploy.master.Master --host 192.168.0.102 --port 7077 --webui-port 8080

========================================

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

17/08/09 14:09:16 INFO Master: Started daemon with process name: 7715@arjun-VPCEH26EN

17/08/09 14:09:16 INFO SignalUtils: Registered signal handler for TERM

17/08/09 14:09:16 INFO SignalUtils: Registered signal handler for HUP

17/08/09 14:09:16 INFO SignalUtils: Registered signal handler for INT

17/08/09 14:09:16 WARN Utils: Your hostname, arjun-VPCEH26EN resolves to a loopback address: 127.0.1.1; using 192.168.0.102 instead (on interface wlp7s0)

17/08/09 14:09:16 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

17/08/09 14:09:17 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/08/09 14:09:17 INFO SecurityManager: Changing view acls to: arjun

17/08/09 14:09:17 INFO SecurityManager: Changing modify acls to: arjun

17/08/09 14:09:17 INFO SecurityManager: Changing view acls groups to:

17/08/09 14:09:17 INFO SecurityManager: Changing modify acls groups to:

17/08/09 14:09:17 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(arjun); groups with view permissions: Set(); users with modify permissions: Set(arjun); groups with modify permissions: Set()

17/08/09 14:09:17 INFO Utils: Successfully started service 'sparkMaster' on port 7077.

17/08/09 14:09:17 INFO Master: Starting Spark master at spark://192.168.0.102:7077

17/08/09 14:09:17 INFO Master: Running Spark version 2.2.0

17/08/09 14:09:18 WARN Utils: Service 'MasterUI' could not bind on port 8080. Attempting port 8081.

17/08/09 14:09:18 INFO Utils: Successfully started service 'MasterUI' on port 8081.

17/08/09 14:09:18 INFO MasterWebUI: Bound MasterWebUI to 0.0.0.0, and started at http://192.168.0.102:8081

17/08/09 14:09:18 INFO Utils: Successfully started service on port 6066.

17/08/09 14:09:18 INFO StandaloneRestServer: Started REST server for submitting applications on port 6066

17/08/09 14:09:18 INFO Master: I have been elected leader! New state: ALIVE

Setting up the master node is complete.
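The two values you need later, the master URL and the web UI address, can be pulled out of the log rather than read by eye. The sketch below uses hypothetical function names that are not part of Spark, and its patterns assume the wording of the log lines shown above; other Spark versions may word them differently.

```shell
# Hypothetical helpers (not part of Spark): read the master URL and the web UI
# address out of a master log file. If the log holds several starts, the last
# match wins.
#   $1 = path to the master log file
master_url_from_log() {
    sed -n 's|.*Starting Spark master at \(spark://[^ ]*\).*|\1|p' "$1" | tail -n 1
}

master_ui_from_log() {
    sed -n 's|.*Bound MasterWebUI to .* started at \(http://[^ ]*\).*|\1|p' "$1" | tail -n 1
}

# Usage (pass the log path printed by start-master.sh):
#   master_url_from_log /path/to/master.out
#   master_ui_from_log /path/to/master.out
```

Reading the web UI address from the log is useful here because, as the log above shows, the UI can fall back to port 8081 when 8080 is already taken.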

Setup Spark Slave(Worker) Node

The following is a step-by-step guide to setting up a slave (worker) node for an Apache Spark cluster. Execute these steps on every node that you want to be a worker.

1. Navigate to Spark Configuration Directory.

Go to the SPARK_HOME/conf/ directory.

SPARK_HOME is the complete path to the root directory of Apache Spark on your computer.

2. Edit the file spark-env.sh – Set SPARK_MASTER_HOST.

Note: If spark-env.sh is not present, spark-env.sh.template will be. Make a copy of spark-env.sh.template named spark-env.sh and add or edit the field SPARK_MASTER_HOST. The relevant part of the file, with SPARK_MASTER_HOST added, is shown below:

spark-env.sh

# Options for the daemons used in the standalone deploy mode

# - SPARK_MASTER_HOST, to bind the master to a different IP address or hostname

SPARK_MASTER_HOST='192.168.0.102'

# - SPARK_MASTER_PORT / SPARK_MASTER_WEBUI_PORT, to use non-default ports for the master

Replace the IP with the IP address assigned to your master (the one you used when setting up the master node).
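The worker needs the master's address again when it is started, so one option is to read it back from spark-env.sh instead of typing it twice. The function below is a hypothetical sketch, not part of Spark. It assumes the master listens on port 7077 unless another port is passed, and it sources spark-env.sh, so use it only on a file you trust.

```shell
# Hypothetical helper (not part of Spark): read SPARK_MASTER_HOST from a
# spark-env.sh file and print the matching spark:// URL.
#   $1 = path to spark-env.sh, $2 = master port (optional, defaults to 7077)
master_url_from_env() {
    env_file="$1"
    port="${2:-7077}"
    if [ ! -f "$env_file" ]; then
        echo "$env_file not found" >&2
        return 1
    fi
    # Source the file in a subshell so its variables do not leak into this
    # shell, and ignore any SPARK_MASTER_HOST inherited from the environment.
    host=$(
        unset SPARK_MASTER_HOST
        . "$env_file" >/dev/null 2>&1
        printf '%s' "${SPARK_MASTER_HOST:-}"
    )
    if [ -z "$host" ]; then
        echo "SPARK_MASTER_HOST is not set in $env_file" >&2
        return 1
    fi
    printf 'spark://%s:%s\n' "$host" "$port"
}

# Usage from SPARK_HOME/sbin:
#   ./start-slave.sh "$(master_url_from_env "$SPARK_HOME/conf/spark-env.sh")"
```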

3. Start Spark as slave.

Go to SPARK_HOME/sbin and execute the following command.

$ ./start-slave.sh spark://<your.master.ip.address>:7077

apples-MacBook-Pro:sbin John$ ./start-slave.sh spark://192.168.0.102:7077

starting org.apache.spark.deploy.worker.Worker, logging to /usr/local/Cellar/apache-spark/2.2.0/libexec/logs/spark-John-org.apache.spark.deploy.worker.Worker-1-apples-MacBook-Pro.local.out

4. Verify the log.

In the log, you should find that this worker node has successfully registered with the master running at spark://192.168.0.102:7077 on the network.

Spark Command: /Library/Java/JavaVirtualMachines/jdk1.8.0_144.jdk/Contents/Home/jre/bin/java -cp /usr/local/Cellar/apache-spark/2.2.0/libexec/conf/:/usr/local/Cellar/apache-spark/2.2.0/libexec/jars/* -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://192.168.0.102:7077

========================================

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

17/08/09 14:12:55 INFO Worker: Started daemon with process name: 7345@apples-MacBook-Pro.local

17/08/09 14:12:55 INFO SignalUtils: Registered signal handler for TERM

17/08/09 14:12:55 INFO SignalUtils: Registered signal handler for HUP

17/08/09 14:12:55 INFO SignalUtils: Registered signal handler for INT

17/08/09 14:12:56 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/08/09 14:12:56 INFO SecurityManager: Changing view acls to: John

17/08/09 14:12:56 INFO SecurityManager: Changing modify acls to: John

17/08/09 14:12:56 INFO SecurityManager: Changing view acls groups to:

17/08/09 14:12:56 INFO SecurityManager: Changing modify acls groups to:

17/08/09 14:12:56 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(John); groups with view permissions: Set(); users with modify permissions: Set(John); groups with modify permissions: Set()

17/08/09 14:12:56 INFO Utils: Successfully started service 'sparkWorker' on port 58156.

17/08/09 14:12:57 INFO Worker: Starting Spark worker 192.168.0.100:58156 with 4 cores, 7.0 GB RAM

17/08/09 14:12:57 INFO Worker: Running Spark version 2.2.0

17/08/09 14:12:57 INFO Worker: Spark home: /usr/local/Cellar/apache-spark/2.2.0/libexec

17/08/09 14:12:57 INFO Utils: Successfully started service 'WorkerUI' on port 8081.

17/08/09 14:12:57 INFO WorkerWebUI: Bound WorkerWebUI to 0.0.0.0, and started at http://192.168.0.100:8081

17/08/09 14:12:57 INFO Worker: Connecting to master 192.168.0.102:7077...

17/08/09 14:12:57 INFO TransportClientFactory: Successfully created connection to /192.168.0.102:7077 after 57 ms (0 ms spent in bootstraps)

17/08/09 14:12:57 INFO Worker: Successfully registered with master spark://192.168.0.102:7077

The setup of the worker node is successful.
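When you set up several workers, checking each log by hand becomes tedious, so the registration check can be scripted. The function name `worker_registered` is hypothetical and not part of Spark, and the text it searches for is assumed from the last log line shown above.

```shell
# Hypothetical helper (not part of Spark): succeed only if the worker log
# reports a successful registration with the given master URL.
#   $1 = path to the worker log, $2 = master URL such as spark://host:7077
worker_registered() {
    # -F treats the pattern as a fixed string, so the dots in the IP address
    # are matched literally; -q suppresses output and only sets the status.
    grep -F -q "Successfully registered with master $2" "$1"
}

# Usage (pass the log path printed by start-slave.sh):
#   if worker_registered /path/to/worker.out spark://192.168.0.102:7077; then
#       echo "worker is registered"
#   fi
```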

Multiple Spark Worker Nodes

To add more worker nodes to the Apache Spark cluster, repeat the worker setup process on the other nodes.
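As an alternative to logging in to every node, Spark 2.x can start all workers from the master: its sbin/start-slaves.sh script reads the hosts listed in conf/slaves, one per line, and starts a worker on each over SSH. The sketch below only writes that host list. The function name `write_worker_list` is hypothetical, the worker addresses in the usage comment are example values, and it assumes passwordless SSH from the master to each worker. Newer Spark releases rename the file and script to conf/workers and start-workers.sh.

```shell
# Hypothetical helper (not part of Spark): write one worker host per line to
# the host list that Spark's launch scripts read.
#   $1 = path to the list file, remaining arguments = worker hosts
write_worker_list() {
    list_file="$1"
    shift
    : > "$list_file"    # start from an empty file
    for host in "$@"; do
        printf '%s\n' "$host" >> "$list_file"
    done
}

# Usage on the master (Spark 2.x file and script names, example addresses):
#   write_worker_list "$SPARK_HOME/conf/slaves" 192.168.0.100 192.168.0.101
#   "$SPARK_HOME/sbin/start-slaves.sh"
```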

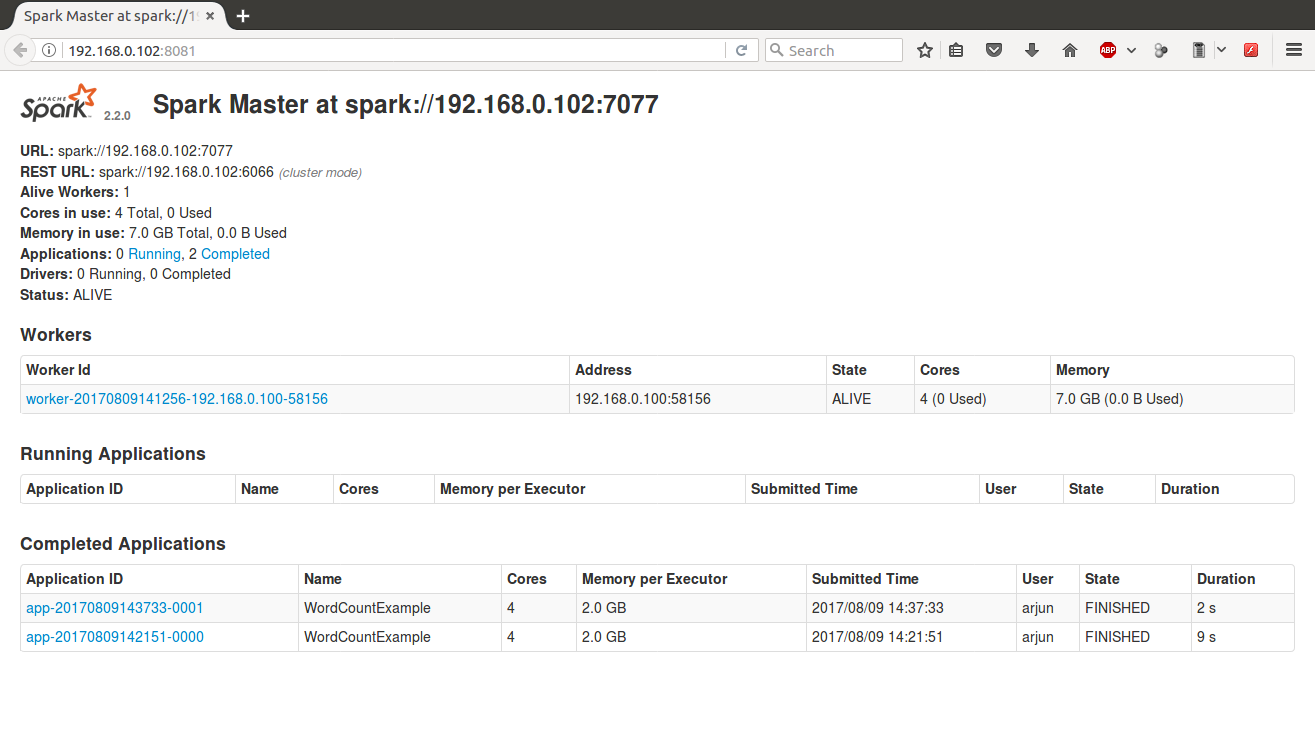

Once you have added some slaves to the cluster, you can view the workers connected to the master via the master web UI.

Open the URL http://<your.master.ip.address>:<web-ui-port-number>/ (for example, http://192.168.0.102:8081/) in a browser. The slaves connected to the master are listed under Workers.

Conclusion

In this Apache Spark tutorial, we have successfully set up a master node and multiple worker nodes, and thus an Apache Spark cluster. In the next tutorial, we shall learn to configure the Spark ecosystem.